- #Download spark maven how to#

- #Download spark maven install#

- #Download spark maven software#

- #Download spark maven Offline#

If you are running locally, you can load the model/pipeline from your local file system. In a cluster setup, however, you need to put the model/pipeline on a distributed file system such as HDFS, DBFS, or S3.

Choosing the right model/pipeline is up to you: since you are downloading and loading models/pipelines manually, Spark NLP is not picking the most recent compatible models/pipelines for you.
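The local-versus-cluster rule above can be checked before loading anything. The following is a minimal sketch, not part of Spark NLP: the helper names `is_distributed_path` and `check_model_path` and the list of URI schemes are assumptions made for illustration.

```python
from urllib.parse import urlparse

# Assumption: URI schemes treated as "distributed" for this sketch.
DISTRIBUTED_SCHEMES = {"hdfs", "dbfs", "s3", "s3a", "s3n"}


def is_distributed_path(path):
    """Return True if the path uses one of the distributed schemes above."""
    return urlparse(path).scheme.lower() in DISTRIBUTED_SCHEMES


def check_model_path(path, master):
    """Return the path unchanged, or raise if a cluster master is paired
    with a path that is not on a distributed file system."""
    running_locally = master.startswith("local")
    if not running_locally and not is_distributed_path(path):
        raise ValueError(
            f"master={master!r} looks like a cluster; "
            f"put the model on HDFS, DBFS, S3, etc. instead of {path!r}"
        )
    return path
```

With this sketch, `check_model_path("/tmp/pos", "local[*]")` passes the path through, while the same path with `master="yarn"` raises a `ValueError`.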

# you download this pipeline, extract it, and use PipelineModel # pipeline = PretrainedPipeline('explain_document_dl', lang='en')

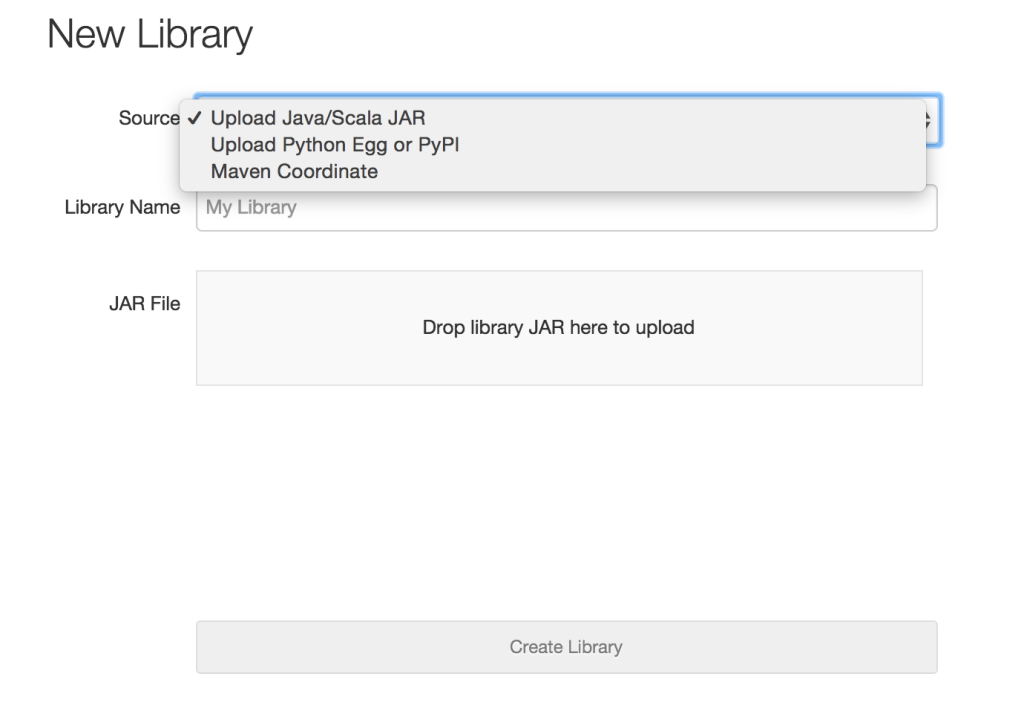

setOutputCol ( "pos" ) # example for pipelines # you download this model, extract it, and use. # french_pos = PerceptronModel.pretrained("pos_ud_gsd", lang="fr") # instead of using pretrained() for online: pretrained() function to download pretrained models, you will need to manually download your pipeline/model from Models Hub, extract it, and load it.Įxample of SparkSession with Fat JAR to have Spark NLP offline:
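The SparkSession example itself is not included here. As a minimal sketch of the idea, the session can be pointed at a locally stored Fat JAR through `spark.jars` instead of resolving the package from Maven. The helper names, the memory and Kryo buffer values, and the JAR path used below are assumptions for illustration, not values taken from this page.

```python
def offline_spark_conf(fat_jar_path, driver_memory="16G"):
    """Return SparkSession settings for using a local Spark NLP Fat JAR.

    All values except the JAR path are illustrative defaults (assumptions).
    """
    return {
        "spark.driver.memory": driver_memory,
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.kryoserializer.buffer.max": "2000M",
        # A local JAR file, so nothing has to be fetched from Maven.
        "spark.jars": fat_jar_path,
    }


def start_offline_session(fat_jar_path, app_name="Spark NLP", master="local[*]"):
    """Create a SparkSession from the settings above."""
    # Imported lazily so the config helper works without PySpark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name).master(master)
    for key, value in offline_spark_conf(fat_jar_path).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

A call such as `start_offline_session("/tmp/spark-nlp-assembly.jar")` (a hypothetical path) would then start a local session that loads Spark NLP from that file.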


Spark NLP library and all the pre-trained models/pipelines can be used entirely offline with no access to the Internet.



How to correctly install Spark NLP on Windows 8 and 10

To take full advantage of Spark NLP on Windows (8 or 10), you need to set up Apache Spark, Apache Hadoop, and Java correctly by following these instructions:


To launch an EMR cluster with Apache Spark/PySpark and Spark NLP correctly, you need both a bootstrap script and a software configuration.

A sample of your bootstrap script:

```bash
#!/bin/bash

# Append the environment variables to .bashrc, then reload it.
echo -e 'export PYSPARK_PYTHON=/usr/bin/python3
export SPARK_JARS_DIR=/usr/lib/spark/jars
export SPARK_HOME=/usr/lib/spark' >> $HOME/.bashrc && source $HOME/.bashrc

sudo python3 -m pip install awscli boto spark-nlp
```

A sample of your software configuration in JSON on S3 (must be publicly accessible): [
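The software configuration sample is not reproduced in full here. As a rough sketch of the general shape such a file takes, EMR configurations are a JSON list of classification objects. The helper name `emr_software_configuration` and the `spark-defaults` values below are assumptions for illustration; only `PYSPARK_PYTHON=/usr/bin/python3` comes from the bootstrap script above.

```python
import json


def emr_software_configuration(pyspark_python="/usr/bin/python3", spark_defaults=None):
    """Build an EMR-style configuration: a list of classification objects."""
    config = [
        {
            "Classification": "spark-env",
            "Configurations": [
                {
                    "Classification": "export",
                    # Same interpreter the bootstrap script exports.
                    "Properties": {"PYSPARK_PYTHON": pyspark_python},
                }
            ],
        }
    ]
    if spark_defaults:
        config.append(
            {"Classification": "spark-defaults", "Properties": dict(spark_defaults)}
        )
    return config


if __name__ == "__main__":
    # Illustrative Spark settings (assumptions, not taken from this page).
    defaults = {
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.kryoserializer.buffer.max": "2000M",
    }
    print(json.dumps(emr_software_configuration(spark_defaults=defaults), indent=2))
```

The printed JSON could then be uploaded to S3 and referenced when creating the cluster; which properties you actually need depends on your Spark and Spark NLP versions.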

If you are interested, there is a simple SBT project for Spark NLP to guide you on how to use it in your projects: Spark NLP SBT Starter.

spark-nlp on Apache Spark 3.0.x and 3.1.x: //
